We can follow both of those assumptions by representing the speech spectrum using bands spaced according to equivalent rectangular bandwidth (ERB). In other words, (1) the speech spectrum tends not to have sharp discontinuities, and (2) the human auditory system perceives low frequencies with higher resolution than high frequencies. we perceive it with a nonlinear frequency resolution, corresponding to the human ear’s auditory filters (a.k.a.the general shape of the speech spectrum (a.k.a.We can do that by making the following assumptions: If we want good speech quality, and we want our algorithm to run in real time on a CPU without instantly draining the battery, then we need to find a way to simplify the problem. For real-time applications on power-constrained devices, however, we can't realistically expect to have a very good phase estimator. This is the no-compromise route taken by PoCoNet, which can get around the added complexity because it’s optimized to run on a GPU. Where do we go from here? If we want to improve quality, then we could also estimate phase. If we want to enhance all frequencies up to 20 kHz (the upper limit of human hearing), then our neural network will have to estimate 400 amplitudes, which is very computationally expensive. Assuming we use 20-millisecond windows, the STFT bins will be spaced 50 Hz apart. In other words, the way in which the phase changes over time still does matter.Īnother issue for real-time, power-constrained operation is the number of frequency bins whose amplitudes we need to estimate.

While the ear is essentially insensitive to the absolute phase, what we perceive here is the inconsistency of the phase across frames. This is due to the error in the phase, which we took from the noisy signal. The noise is still audible as a form of roughness in the speech.

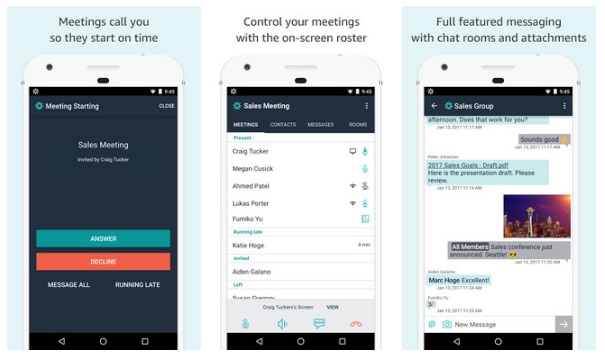

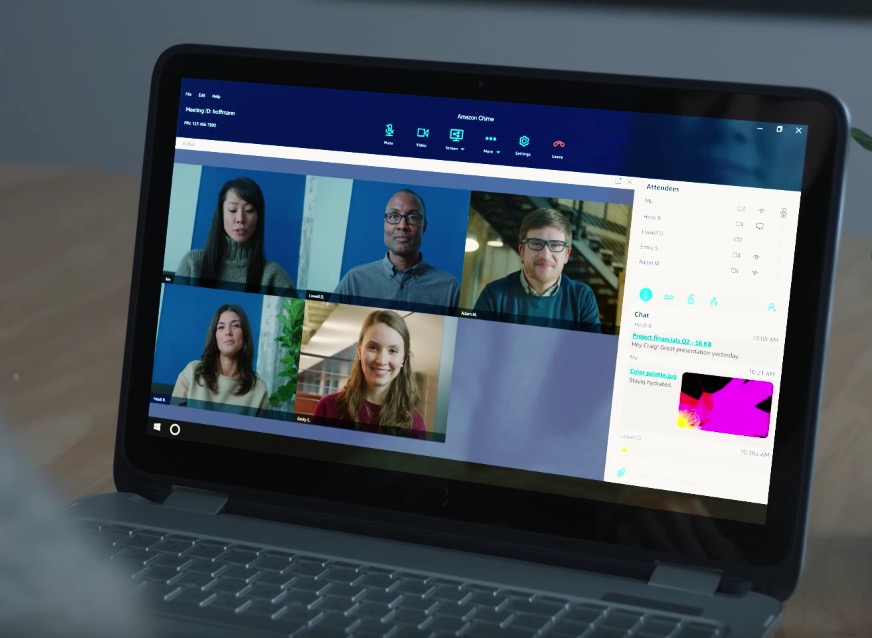

We start from the clean speech sample below:Ĭertainly not bad, but also far from perfect. Let's consider a simple synthetic example. Speech enhancement and STFTīefore getting into any deep learning, let's look at the job we'll be asking our machine learning model to perform. Rather than have a deep neural network (DNN) do all the work, PercepNet tries to have it do as little work as possible. Like most recent speech enhancement algorithms, PercepNet uses deep learning, but it applies it in a different way. For more details, you can also refer to our Interspeech paper.ĭespite operating in real time, with low complexity, PercepNet can still provide state-of-the-art speech enhancement. In this post, we'll look into the principles that make PercepNet work. This makes it usable in cellphones and other power-constrained devices.Īt Interspeech 2020, PercepNet finished second in its category (real-time processing) in the Deep Noise Suppression Challenge, despite using only 4% of a CPU core, while another Amazon Chime algorithm, PoCoNet, finished first in the offline-processing category. It is designed to suppress noise and reverberation in the speech signal, in real time, without using too many CPU cycles.

PercepNet is one of the core technologies of Amazon Chime's Voice Focus feature.
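To make the numbers above concrete, here is a minimal Python sketch of the bin-count arithmetic and of what ERB-spaced bands look like. It is not PercepNet's code. It assumes the widely used Glasberg and Moore approximation of the ERB-rate scale, and the helper names and the choice of 32 bands are hypothetical, picked only for illustration.

```python
import math


def stft_bin_count(window_ms: float, max_freq_hz: float) -> tuple:
    """Return (bin spacing in Hz, number of bins up to max_freq_hz)."""
    # A window of T seconds gives a frequency resolution of 1/T Hz.
    spacing_hz = 1000.0 / window_ms
    return spacing_hz, round(max_freq_hz / spacing_hz)


def hz_to_erb_rate(freq_hz: float) -> float:
    """Glasberg-Moore ERB-rate scale (assumed here, not taken from the post)."""
    return 21.4 * math.log10(1.0 + 0.00437 * freq_hz)


def erb_rate_to_hz(erb_rate: float) -> float:
    """Inverse of hz_to_erb_rate."""
    return (10.0 ** (erb_rate / 21.4) - 1.0) / 0.00437


def erb_band_edges(num_bands: int, max_freq_hz: float) -> list:
    """Band edges in Hz, equally spaced on the ERB-rate scale from 0 Hz."""
    top = hz_to_erb_rate(max_freq_hz)
    return [erb_rate_to_hz(top * i / num_bands) for i in range(num_bands + 1)]


# 20 ms windows and a 20 kHz upper limit, as in the text.
spacing, bins = stft_bin_count(20.0, 20000.0)
print(f"STFT: {spacing:.0f} Hz spacing, {bins} bins")  # 50 Hz spacing, 400 bins

# Hypothetical band count, for illustration only.
NUM_BANDS = 32
edges = erb_band_edges(NUM_BANDS, 20000.0)
widths = [hi - lo for lo, hi in zip(edges, edges[1:])]
print(f"ERB: {NUM_BANDS} bands instead of {bins} bins")
print(f"narrowest band: {widths[0]:.1f} Hz, widest band: {widths[-1]:.1f} Hz")
```

The bands come out narrow at low frequencies and wide at high frequencies, which mirrors assumption (2), and a model that estimates one value per band has far fewer outputs than one that estimates every STFT bin.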
